How we can prevent Nostr from ruining our day

Nostr has a problem with explicit content. Let's look at this from the perspective of a typical Nostr user.

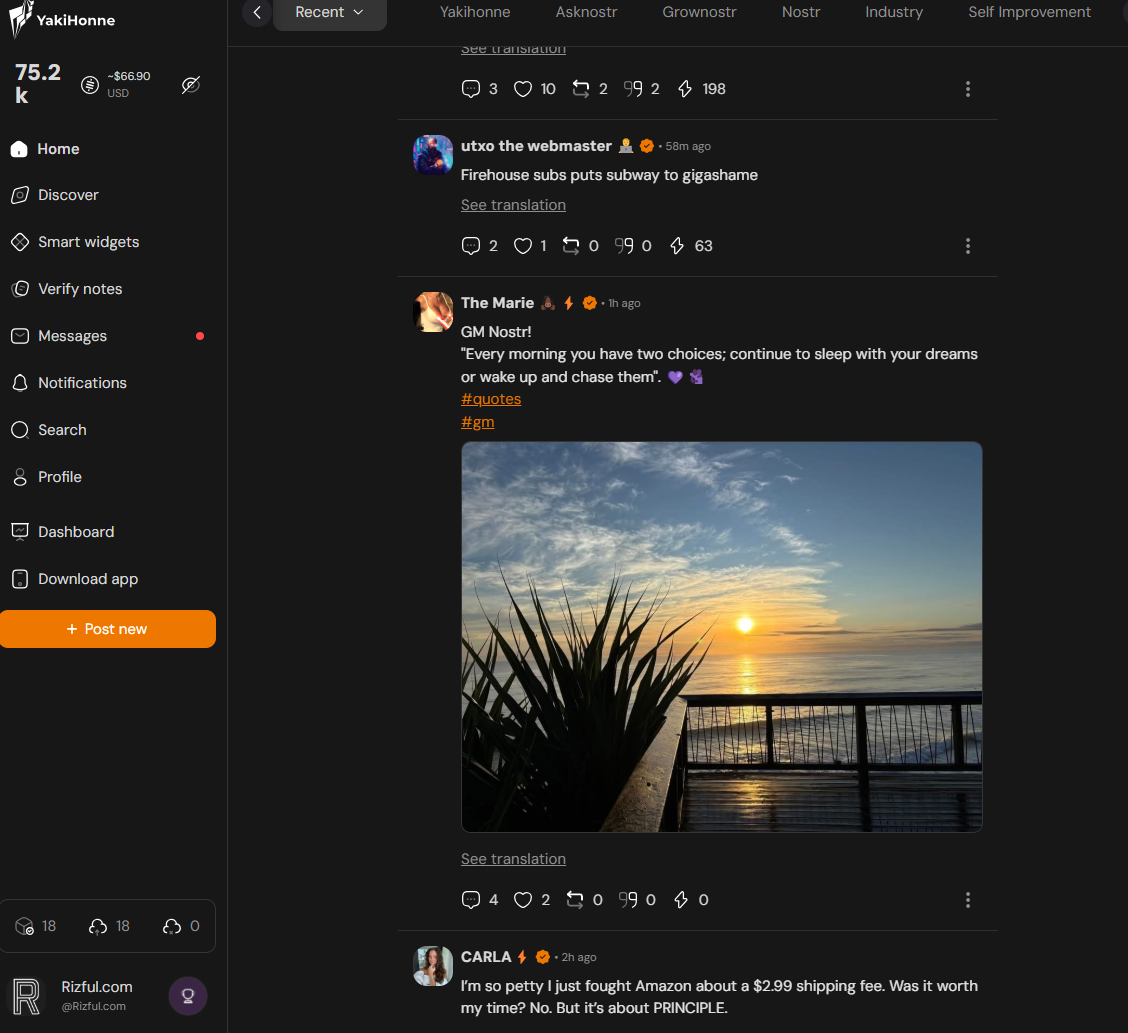

Above we can see my "recent" Nostr feed on the Yakihonne web application. This shows notes only from other Nostr users whom I have affirmatively "followed". For this reason, the odds of me encountering an image that ruins my day are actually low, and I don't think I need a service to scan images for me.

Here, on the other hand...

This shows me navigating to the #asknostr hashtag. Looks fine, right?

Well, I can tell you: a few days ago I was looking at this very same hashtag, freshly awake after my morning coffee, and I saw a bunch of posts that really ruined my day. Let's just say it wasn't "pornography" that I saw; it was much, much worse. Much worse.

So, what do we do about this?

Various solutions have been proposed, including relying on community tagging, relying on a "web of trust", or even blacklisting certain image domains. But none of these will work at scale. When I go to the #asknostr hashtag, I don't just want to see posts from my web of trust... I want to see all the posts! I want to see all the posts, except SPAM and images that will ruin my day.

The part where I quixotically attempt to fix this problem

After discussing this a bit with FiatJaf, Hodlbod, and others in the community, I've arrived at what I currently think is the best solution. Someone may have a better one -- if that's you, please speak up.

On this page I will use the term "APPLICATION" to mean either an intermediary service/API, like the ones run by Yakihonne, Coracle, Primal, or Damus, or a relay running strfry or another relay implementation.

We start with the "APPLICATION" (again, this could be the API behind a web or mobile app, or it could be a relay) getting some sense of WHICH events should be prioritized for image safety scanning.

One trick: if you know that your users will often be checking popular hashtags like #asknostr, you can queue up those events to extract their image URLs, and do this PERIODICALLY (say, every few minutes), so that when your user sits down and looks at #asknostr, all the recent events have already been scanned for NSFW images.

In fact, if you are showing your users lists of events for popular hashtags like #asknostr, then you need to DE-SPAM those events anyway. I suggest you DE-SPAM them first, leaving you with a list of events you MIGHT want to show, and then extract image URLs and test those URLs for NSFW content.
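The extraction step might look something like the Python sketch below. It is a hypothetical illustration, not code from any of the apps mentioned. It assumes an event is a dict with `content` and `tags` fields (as in Nostr's JSON), pulls out URLs whose path ends in a common image or video extension, and also reads `url` entries from NIP-92 `imeta` tags. The extension list and the function name are our own choices. Media links without a recognizable extension will be missed.

```python
import re

# Hypothetical extension list: media links without one of these are not detected.
MEDIA_EXTENSIONS = ("jpg", "jpeg", "png", "gif", "webp", "mp4", "mov", "webm")

MEDIA_URL_RE = re.compile(
    r"https?://[^\s\"'<>]+?\.(?:" + "|".join(MEDIA_EXTENSIONS) + r")"
    r"(?:\?[^\s\"'<>]*)?"           # optional query string
    r"(?=[.,!)]*(?:[\s\"'<>]|$))",  # allow trailing punctuation, then whitespace/end
    re.IGNORECASE,
)


def extract_media_urls(event: dict) -> list[str]:
    """Return de-duplicated image/video URLs found in a Nostr event (first-seen order)."""
    urls = MEDIA_URL_RE.findall(event.get("content", ""))
    # NIP-92 "imeta" tags hold space-delimited "key value" entries, e.g. "url https://...".
    for tag in event.get("tags", []):
        if tag and tag[0] == "imeta":
            for entry in tag[1:]:
                key, _, value = entry.partition(" ")
                if key == "url" and value:
                    urls.append(value)
    return list(dict.fromkeys(urls))  # drop duplicates, keep order
```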

At the most basic level, you extract the image and video URLs from events (any way you choose) and get "scores" for them. The "score" is just a floating-point number between 0 (zero), which signifies "this is safe to show to Grandma", and 1 (one), which signifies "please don't show this to Grandma."

As it turns out, I'm not "all talk", and, despite adhering to a rigorous schedule of recreational drug use and winter sports, have now thrown together a pretty high-performance API which does exactly this.

API Docs

Making Requests

We start with a URL that you can use in your browser, like this:

https://nostr-media-alert.com/score?key=demo-api-key-heavily-rate-limited&url=https://rizful-public.s3.us-east-1.amazonaws.com/temp/known-safe-image.jpg

Let's dig in...

Base URL: https://nostr-media-alert.com/score

Query Parameter 1: key — Your API key. We'll provide one.

Query Parameter 2: url — The URL of the image or video you want to check.
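Here is a sketch of calling the endpoint from Python using only the standard library. The function names are hypothetical. You should percent-encode the `url` parameter. Otherwise, a media URL with its own query string would have its `&` and `=` read as parameters of the scoring request. We don't know which HTTP status codes the API uses for errors. `urlopen` raises `HTTPError` for 4xx/5xx responses, so check that behavior against the real service.

```python
import json
import urllib.parse
import urllib.request

BASE_URL = "https://nostr-media-alert.com/score"


def build_score_url(api_key: str, media_url: str) -> str:
    """Build the GET URL for /score, percent-encoding both query parameters."""
    query = urllib.parse.urlencode({"key": api_key, "url": media_url})
    return f"{BASE_URL}?{query}"


def fetch_score(api_key: str, media_url: str, timeout: float = 30.0) -> dict:
    """Call the API and return the decoded JSON body, e.g. {"message": ..., "score": ...}."""
    # 30 s leaves a little headroom over the ~25 s the docs mention for videos.
    with urllib.request.urlopen(build_score_url(api_key, media_url), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```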

Responses

We'll provide you with (hopefully) useful info about the URLs you submit. You should get a response to your HTTP request in under 5 seconds for images and under 25 seconds for videos. In many cases, if we've already scored that URL in the past, you should get a response within about 250 ms (1/4 of a second).

Here's an example response:

    {
      "message": "SUCCESS",
      "score": 0.999
    }

This response indicates that our machine learning models successfully processed your image or video URL. A score above 0.97, or so, suggests that you might not want to show this to Grandma.
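On the client side, turning a response into a show/hide decision can be as small as the sketch below. The 0.97 default comes from the paragraph above. The function name and the choice to return `None` when there is no score are our own assumptions. What to do with `None` is a policy decision for your APPLICATION. One option is to hide media until it has been scored.

```python
from typing import Optional

DEFAULT_THRESHOLD = 0.97  # "a score above 0.97, or so"; lower it for a stricter policy


def should_hide(response: dict, threshold: float = DEFAULT_THRESHOLD) -> Optional[bool]:
    """True = hide, False = show, None = no score (INVALID MEDIA, TIMEOUT, RATE LIMITED, ...)."""
    score = response.get("score")
    if response.get("message") != "SUCCESS" or score is None:
        return None
    return float(score) > threshold
```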

    {
      "message": "INVALID MEDIA",
      "score": null
    }

This means we think the link you submitted is not a valid image or video URL. We're doing some magic to really check media files to be sure they are valid. If you submit a link, and you're really sure it's a valid media file, but you get this message, please let us know.
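We don't know exactly what checks the API runs. The hypothetical helper below is one generic way to inspect the file itself rather than trusting the URL. It recognizes a few common formats from their leading "magic" bytes and only needs the first dozen or so bytes of the file.

```python
from typing import Optional


def sniff_media_type(head: bytes) -> Optional[str]:
    """Guess a container format from a file's first bytes; None if unrecognized."""
    if head.startswith(b"\xff\xd8\xff"):
        return "jpeg"
    if head.startswith(b"\x89PNG\r\n\x1a\n"):
        return "png"
    if head.startswith((b"GIF87a", b"GIF89a")):
        return "gif"
    if head[:4] == b"RIFF" and head[8:12] == b"WEBP":
        return "webp"
    if head.startswith(b"\x1a\x45\xdf\xa3"):
        return "webm/mkv"  # EBML header shared by WebM and Matroska
    if head[4:8] == b"ftyp":
        return "iso-bmff"  # MP4 and MOV, but also HEIC/AVIF images
    return None
```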

    {
      "message": "TIMEOUT",
      "score": null
    }

This means we tried to process your image or video URL, but it took too long, like longer than about 25 seconds. If this is a VIDEO you've sent, sometimes this is normal. You can just check again later... like wait another 20 seconds and try again with the same URL. If this is an IMAGE you sent, you should not see TIMEOUT -- if you see that, please contact us so we can fix it.
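The "wait and try again" advice can be wrapped in a small retry helper. In this hypothetical sketch, `fetch` stands for whatever function you use to call the API for one URL and return the decoded JSON. The 20-second pause follows the suggestion above. The attempt limit is an arbitrary assumption. Passing `sleep` in as a parameter makes the helper easy to test.

```python
import time
from typing import Callable


def score_with_retry(
    fetch: Callable[[str], dict],
    media_url: str,
    max_attempts: int = 3,
    wait_seconds: float = 20.0,
    sleep: Callable[[float], None] = time.sleep,
) -> dict:
    """Call fetch(media_url); while the answer is TIMEOUT, wait and ask again (max_attempts calls total)."""
    response = fetch(media_url)
    for _ in range(max_attempts - 1):
        if response.get("message") != "TIMEOUT":
            break
        sleep(wait_seconds)  # ~20 s between checks, per the advice above
        response = fetch(media_url)
    return response
```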

    {
      "message": "RATE LIMITED",
      "score": null
    }

Your API key is good for a certain number of simultaneous requests. If you see "RATE LIMITED", then you're a big boy, and you're making too many simultaneous requests. To upgrade to more simultaneous requests, please call 1-800-GOOGLE, or, alternatively, think positive thoughts.
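The limit is on simultaneous requests, so one simple way to stay under it is a fixed-size worker pool. In this sketch, `fetch` is again a hypothetical function that scores one URL. Set `max_concurrent` to whatever your key actually allows, since we don't know the number. De-duplicating first also avoids spending requests on the same URL twice.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def score_many(
    fetch: Callable[[str], dict], media_urls: list[str], max_concurrent: int = 4
) -> dict[str, dict]:
    """Score many URLs with never more than max_concurrent requests in flight."""
    unique = list(dict.fromkeys(media_urls))  # skip duplicate URLs, keep order
    # The pool size is the cap on simultaneous fetch() calls.
    with ThreadPoolExecutor(max_workers=max_concurrent) as pool:
        return dict(zip(unique, pool.map(fetch, unique)))
```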

OK, so you've made an API where I can test images and videos to make sure they won't ruin people's day

Yes. Sounds pretty centralized and uncool, right? I bet you next want to ask...

How can we do this in a cooler and more decentralized way?

Good question. I've thought of, and then discarded, many possible solutions.

My first clearly stupid idea

My first genius idea, arrived at after many vigorous bike rides and more than a few bong hits, was this: We're going to run a service that listens to ALL the relays and PRE-COMPUTES image scores for every image, and then publishes those image scores as Nostr events!

Cool, right? No. Not cool. If we did publish these scores, we'd basically be creating our own public "pornography map to Nostr". That's not what we want to do, and it could also be legally a bit dangerous.

How about an open source plugin for strfry that does all this in a decentralized way?

Now this is definitely a non-stupid idea. And that brings me to my next hobby-horse....

Why this is kinda hard to do in a fully decentralized way

Generating the scores requires serious computing resources

It's not magic. I'll tell you how it works. To run our API, we have a web server that takes requests, checks whether we've already scanned the image, and if we have, returns the image's score from our cache. (We don't actually store images, video, or even URLs; we just store a SHA-256 HASH of the URL. If you don't want to keep a database of URLs on your end, you should do the same. A SHA-256 hash can't practically be reversed to recover the URL, though anyone who already has a candidate URL can hash it and check for a match. Hashes also happen to be super fast, fixed-length keys for a database or caching layer.)
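Keying a cache on the URL's hash takes only a few lines with Python's `hashlib`. The in-memory `ScoreCache` class below is a hypothetical stand-in for whatever database or caching layer you actually use.

```python
import hashlib
from typing import Optional


def url_cache_key(media_url: str) -> str:
    """Hex SHA-256 of the URL: a fixed-length (64-char) key; the URL itself is not kept."""
    return hashlib.sha256(media_url.encode("utf-8")).hexdigest()


class ScoreCache:
    """Hypothetical in-memory stand-in for a real database or caching layer."""

    def __init__(self) -> None:
        self._scores: dict[str, float] = {}

    def get(self, media_url: str) -> Optional[float]:
        return self._scores.get(url_cache_key(media_url))

    def put(self, media_url: str, score: float) -> None:
        self._scores[url_cache_key(media_url)] = score
```

The key is the hash of the exact string, so two spellings of the same URL get different keys. One example is the same link with and without a tracking parameter. Whether to normalize URLs before hashing is up to you.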

The reason our API can be blazingly fast (in many cases) is that SOMEONE ELSE BEFORE YOU has already tried to scan this image or video, so we have a pre-computed score. Cool, right?

But let's say we DON'T have a precomputed score. This is where the lifting gets heavy.

Our web application hands off the image URL to a media processing server. This server (actually, it's multiple servers, all listening to the same queue) needs a LOT of CPU and RAM. In case you don't know, parsing big video files (or even big images) is processor-intensive, and on top of that, a service like this has to be able to handle lots of simultaneous requests.

So sure, you need a lot of CPU and RAM to get your media ready for "score generation" -- but that's not all.

The bandwidth needs are HIGH, because either you have to ACTUALLY DOWNLOAD THE WHOLE MEDIA FILE or, if you are smart (like we are), you figure out a way to NOT download the whole media file... but either way, you're definitely doing some downloading, actually a LOT of downloading.
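The post doesn't say how they avoid downloading whole files. One standard technique, not necessarily theirs, is an HTTP Range request for just the first bytes. Servers that don't support ranges reply with a 200 and the full body, so the sketch also stops reading after `n_bytes`. The function names and the 64 KiB default are hypothetical.

```python
import urllib.request


def build_prefix_request(media_url: str, n_bytes: int = 65536) -> urllib.request.Request:
    """GET request asking the server for only bytes 0..n_bytes-1 (HTTP Range)."""
    return urllib.request.Request(media_url, headers={"Range": f"bytes=0-{n_bytes - 1}"})


def fetch_prefix(media_url: str, n_bytes: int = 65536, timeout: float = 10.0) -> bytes:
    """Download at most n_bytes from the start of the file."""
    with urllib.request.urlopen(build_prefix_request(media_url, n_bytes), timeout=timeout) as resp:
        # 206 means the Range was honored. 200 means the server ignored it and is
        # sending the whole file, so we cap the read ourselves and close early.
        return resp.read(n_bytes)
```

A prefix isn't always enough. Some MP4 files keep their index (the `moov` box) at the end, so a real pipeline may need more than one range.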

And that's not the end. Finally, after you have processed the image or video, you have to show it to a machine learning model to get "the score". This requires... wait for it... GPUs. Potentially lots of GPUs, depending on how many images you want to "score" at the same time.

So what are you getting at?

Basically -- you do NOT want to "score images" on the same machine that you use to run your APPLICATION (relay, next.js app, express app, whatever). That would be bad because "image scoring" is highly CPU/RAM/GPU-intensive, and when activity spikes, you don't want it competing with the other processes on that server and making them "slow to a crawl".

And not only that... if you want your system to be low-latency, you really need a distributed architecture where the computers doing the partial downloading & image processing are NOT the same computers that do your "score generation" (also called "machine learning inference").

So you need a distributed service, everybody's favorite thing to build. Our favorite way to do this is with RabbitMQ or SQS, but others might have newer, fancier ways.
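Here is a toy, single-process version in Python to make the shape of that architecture concrete. The `queue.Queue` objects stand in for RabbitMQ or SQS queues. The two thread groups stand in for separate fleets of machines: one downloads and preprocesses, the other runs inference. `preprocess` and `infer` are hypothetical callables you would supply. There is no error handling or persistence, both of which a real broker-based setup would need.

```python
import queue
import threading
from typing import Callable


def run_pipeline(
    urls: list[str],
    preprocess: Callable[[str], bytes],  # stage 1: download + decode (CPU/RAM/bandwidth)
    infer: Callable[[bytes], float],     # stage 2: model inference (GPU)
    n_preprocess_workers: int = 4,
    n_infer_workers: int = 1,
) -> dict[str, float]:
    download_q: queue.Queue = queue.Queue()
    infer_q: queue.Queue = queue.Queue()
    results: dict[str, float] = {}
    lock = threading.Lock()

    def preprocess_worker() -> None:
        while (url := download_q.get()) is not None:  # None = stop signal
            infer_q.put((url, preprocess(url)))

    def infer_worker() -> None:
        while (item := infer_q.get()) is not None:
            url, media = item
            score = infer(media)
            with lock:
                results[url] = score

    stage1 = [threading.Thread(target=preprocess_worker) for _ in range(n_preprocess_workers)]
    stage2 = [threading.Thread(target=infer_worker) for _ in range(n_infer_workers)]
    for t in stage1 + stage2:
        t.start()
    for url in urls:
        download_q.put(url)
    for _ in stage1:
        download_q.put(None)  # one stop signal per stage-1 worker
    for t in stage1:
        t.join()
    for _ in stage2:
        infer_q.put(None)  # stage 1 has drained, so stop stage 2
    for t in stage2:
        t.join()
    return results
```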

AND -- the bandwidth thing is the kicker. If you wanted to, for example, score every image that appears in every event on the Nostr network, you would need a TON of bandwidth, and it would be horribly inefficient for EACH APPLICATION to do its own downloading and scoring!

I don't care about any of that. What I want to know is: Are your scores accurate?

The word "accurate" is tough here. NSFW is a subjective question. But yes: every time we've tested by showing our API an image of a puppy, it scores really low. When we show our API an image or video of something bad, it scores really high. And pretty much everything that scores above about 0.97 (0.95, maybe, for Mormons) should be considered BAD.

Fine. But which machine learning model are you using?

Good question -- and this is another problem with making a service like this open-source. Ours is the first service like this for Nostr, but there should be others, and ANYONE who builds a service like this should....

- Never reveal which models they are using. This is an adversarial situation, where bad people could try to figure out ways to lower the scores of their bad images. We need to keep them guessing.

- When there is an open-source solution, it should NOT recommend a particular machine-learning model to use to generate the scores. Each service should be using a DIFFERENT blend of models.
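For illustration only, "a blend of models" could be as simple as a weighted average of several models' scores. The model callables and weights here are hypothetical placeholders and are deliberately not tied to any real model. Taking the maximum score instead of the average would be a more conservative alternative.

```python
from typing import Callable, Mapping

Model = Callable[[bytes], float]  # hypothetical: preprocessed media -> score in [0, 1]


def blended_score(media: bytes, models: Mapping[str, Model], weights: Mapping[str, float]) -> float:
    """Weighted average of each model's score; stays in [0, 1] if weights are non-negative."""
    total = sum(weights[name] for name in models)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(weights[name] * model(media) for name, model in models.items()) / total
```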

Could I use this API with a strfry plugin?

Yes, that could be interesting. Here are the relevant docs: https://github.com/hoytech/strfry/blob/master/docs/plugins.md

If you're interested in using this API from strfry, let us know; we'll likely build a plugin.
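Here is a rough sketch of the relay side. It assumes the write-policy plugin protocol described in the strfry docs linked above. Under that protocol, strfry writes one JSON request per line to the plugin's stdin, with the incoming event under `event`. The plugin answers each request with one JSON line containing the event `id`, an `action` such as `accept` or `reject`, and an optional `msg`. Check these field names against the current docs. `extract_urls` and `score` are hypothetical hooks; a score lookup could, for example, combine this API with your own cache. The 0.97 threshold comes from earlier in this post.

```python
import json
import sys
from typing import Callable, Iterable, Optional

ExtractFn = Callable[[dict], Iterable[str]]  # event -> media URLs (hypothetical hook)
ScoreFn = Callable[[str], Optional[float]]    # URL -> score in [0, 1], or None if unknown


def decide(request: dict, extract_urls: ExtractFn, score: ScoreFn, threshold: float = 0.97) -> dict:
    """Turn one write-policy request into an accept/reject response for strfry."""
    event = request["event"]
    for url in extract_urls(event):
        s = score(url)
        if s is not None and s > threshold:
            return {"id": event["id"], "action": "reject", "msg": "blocked: media flagged as unsafe"}
    return {"id": event["id"], "action": "accept"}


def main(extract_urls: ExtractFn, score: ScoreFn) -> None:
    for line in sys.stdin:
        if not line.strip():
            continue
        response = decide(json.loads(line), extract_urls, score)
        print(json.dumps(response), flush=True)  # one JSON line back per request
```

strfry has to wait for the plugin's verdict before accepting an event. A slow scoring call (up to ~25 seconds for a video) would therefore stall writes. A practical plugin would probably consult only already-cached scores, accept on a miss, and score in the background.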

Your idea is bad and I have a better way to do this.

Great, please let us know.